Playing With Lives – English GCSE Analysis

Yesterday hundreds of thousands of 16-year-olds opened an envelope to discover the fruits of their first 11 years of hard work at school. College courses, apprenticeships, jobs and more rested on the grades they had been given in their GCSE exams. The night before, many of us in schools were already panicking that something had gone wrong with their English results.

Schools receive exam results the day before so that we can analyse them, print out the all-important results, stuff them in envelopes, etc. Access is usually restricted to a handful of school leaders and the data/exams team, and all is quiet until Thursday morning. But, as we now know, Wednesday was different: Twitter and the TES Forum were alive with worry and disgust over many of the English results. The press picked up the story in the evening, and it hit the front pages and breakfast news before the children had even had a chance to see their own results.

So what exactly has happened? Grade boundaries were changed between the January sitting and the June sitting, but because of the way the courses are constructed it's more complicated than that. No apologies for the in-depth analysis here: I think it's needed, I hope it's useful for other schools, and I hope the exam boards and Ofqual can respond. If there are errors in my understanding, logic or calculations, please let me know. This analysis looks at AQA's English Language exam, as that's the one we do; AQA's English exams were also sat by 62% of the country this year.

A bit of background: there are three English exams from AQA (as with all boards). There is English (4700), which is mainly taken by lower-ability students as it removes the need to sit the Literature paper but still counts towards league tables. Alongside this there are English Language (4705) and English Literature (4710). Whilst it's only English Language that counts as 'English' in the performance tables (5 A*-C including English and maths, etc.), a student has to have sat the Literature paper for it to count. Each exam is also split into a Foundation tier (grades C-U) and a Higher tier (grades A*-D), just to confuse matters.

The results for 4700 and 4705 differ greatly. The 4700 English exam had only 31% C+ passes, but this is to be expected given the students entered for that paper. 4705 English Language was 75% C+. This works out at a combined pass rate of 63.7% for English as a whole with AQA, neatly just below the national figure of 64.2%, just where Ofqual wanted it. Remember that figure; it will be useful later. Bear in mind, though, that these figures cover all the students who sat the exams (and entered controlled assessments) across both the January and June sittings.

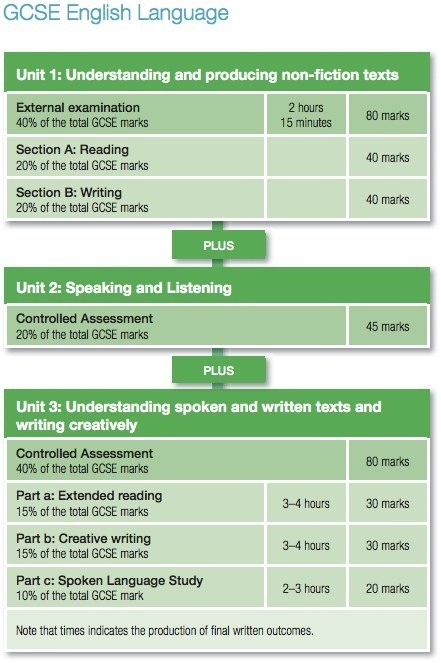

English Language (4705) is made up of three units. See the diagram from AQA:

Schools can 'cash in' these units at any point during the course, and this is where the key issue (and the scandal) lies: the grade boundaries for all three units changed between the January and June entries.

The Exam:

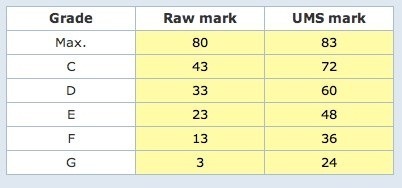

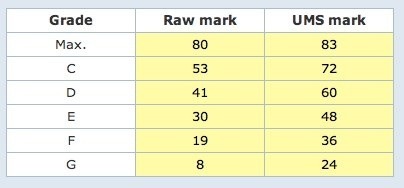

Unit 1 is an exam, and each exam is different, so you would expect to see a small change in grade boundaries from one exam to the next depending on how hard it is and, to a degree, how well students have done on it. This happens with every exam board and every subject and is not a problem. However, something fairly extreme happened this year. Look at these two tables from AQA:

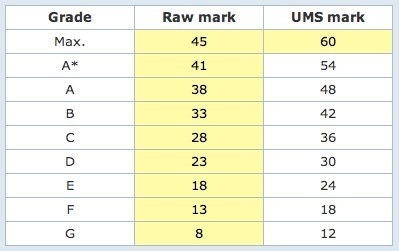

Unit 1 January:

Unit 1 June:

On the Foundation Tier, the raw mark required to gain a grade C moved from 43/80 to 53/80: a shift of 10 marks, or 12.5% of the paper. (On the Higher Tier it moved from 41/80 to 43/80.) Now, the June exam could simply have been poorly written, so that pupils found it incredibly easy and the boundaries had to move up. At my school we entered most students for the written exam in both January and June; it was a new specification and we wanted an accurate gauge of their progress. I compared the average UMS mark of our pupils who sat the same paper in both sittings. (UMS, the uniform mark scale, is standardised so that a C is always worth the same, which allows comparison between different exams.)
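The 12.5% figure is simply the boundary move divided by the paper's total raw marks. A minimal Python sketch, using only the grade C boundaries quoted above (the function name is my own, purely for illustration):

```python
def boundary_shift_percent(old_boundary: int, new_boundary: int, max_mark: int) -> float:
    """Return a grade-boundary move as a percentage of the paper's total raw marks."""
    return 100 * (new_boundary - old_boundary) / max_mark


# Unit 1 grade C raw boundaries, January -> June, each out of 80 raw marks
foundation_shift = boundary_shift_percent(43, 53, 80)
higher_shift = boundary_shift_percent(41, 43, 80)
print(f"Foundation: {foundation_shift}%  Higher: {higher_shift}%")  # prints "Foundation: 12.5%  Higher: 2.5%"
```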

Our Foundation Tier students gained 2 UMS marks (out of 120) on average from January to June. This isn't much of an improvement considering the amount of highly targeted intervention we put in, but it does suggest the 10-mark grade boundary shift wasn't entirely uncalled for (although you could argue it was poor practice to produce two exams of such differing difficulty).

Our Higher Tier students lost 10 UMS marks on average. Apparently they did 8% worse in their summer exam after an extra 6 months of hard work. This can't be right. We're fortunate that, because they sat the paper twice, their best result will have been carried forward, and for many this was their January paper. This implies that the mark boundary on the Higher Tier should actually have fallen from January to June. Or it implies that AQA completely messed up their boundaries in January, making it far too easy to get a C. I suspect it was a bit of both.
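The comparison above can be sketched in a few lines of Python: average each pupil's change between sittings, express it as a share of Unit 1's 120 UMS, and keep each pupil's better result, since that is the one carried forward. The pupil marks below are invented for illustration and are not our school's data; the function names are hypothetical.

```python
from statistics import mean

UNIT1_UMS_MAX = 120  # Unit 1 is marked out of 120 UMS


def average_ums_change(pairs: list[tuple[int, int]]) -> float:
    """Mean (June - January) UMS change for pupils who sat the paper in both sittings."""
    return mean(june - january for january, june in pairs)


def as_percent_of_unit(ums_change: float) -> float:
    """Express a UMS change as a percentage of Unit 1's maximum UMS."""
    return 100 * ums_change / UNIT1_UMS_MAX


def carried_forward(pairs: list[tuple[int, int]]) -> list[int]:
    """The better of each pupil's two sittings, i.e. the result that counts."""
    return [max(january, june) for january, june in pairs]


# Hypothetical Higher Tier pupils as (January UMS, June UMS); NOT real results.
higher = [(90, 78), (84, 76), (72, 62)]
change = average_ums_change(higher)
print(change, round(as_percent_of_unit(change), 1), carried_forward(higher))
# prints: -10 -8.3 [90, 84, 72]
```

With invented marks averaging a 10 UMS drop, the sketch reproduces the roughly 8% fall described above, and every pupil keeps their January mark.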

The Controlled Assessments:

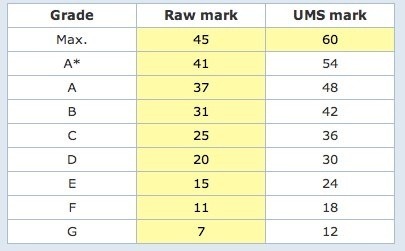

There were two controlled assessments, as detailed above. These replace what used to be coursework. They are completed under exam conditions in the classroom, marked by teachers and then moderated by an AQA assessor. The tasks remain the same throughout the two-year course and can be 'cashed in' at any point in those two years. So, unlike the exams, it stands to reason that a piece of CA submitted in January should gain exactly the same mark as if it were submitted in June. It is, after all, identical. This didn't happen.

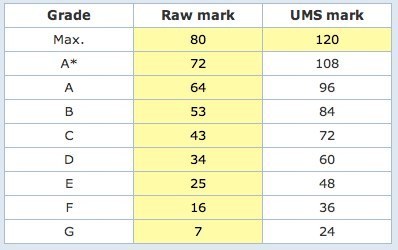

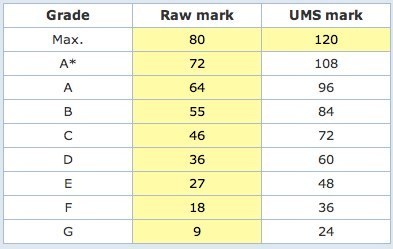

The grade boundaries for these changed from January to June. Here are the figures:

Unit 2 January:

Unit 2 June:

Unit 3 January:

Unit 3 June:

See what happened there? Same child, same work, different submission date, harder to get a C. That’s simply unfair on individual children and also on schools.

Across the two controlled assessments combined, a C now requires an extra 6 marks out of 125.

So a school that completed all its controlled assessments and submitted them in January will have performed better than one that completed them but held off submitting everything until the end of the course. And individual students will have received worse grades because of a submission date that was out of their control, and whose effect schools could not have foreseen.
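To see how identical work can end up with a different mark, here is a minimal Python sketch of converting a raw mark to UMS. It assumes the conversion is a straight-line interpolation between grade-boundary anchor points (with a C fixed at 60% of the unit's UMS), which is a simplification of the boards' actual rules. The function name and all the boundary figures are hypothetical; they are not AQA's published January and June boundaries.

```python
from bisect import bisect_right

# Assumed UMS anchors: each grade boundary maps to a fixed share of the unit's
# maximum UMS (C = 60%, D = 50%, ...). Real conversions add rounding and
# top-end rules that this sketch ignores.
UMS_FRACTION = {"A*": 0.9, "A": 0.8, "B": 0.7, "C": 0.6, "D": 0.5,
                "E": 0.4, "F": 0.3, "G": 0.2}


def raw_to_ums(raw: int, raw_max: int, ums_max: int, boundaries: dict[str, int]) -> int:
    """Convert a raw mark to UMS by straight-line interpolation between boundaries."""
    # Anchor points (raw mark, UMS), from zero up to full marks.
    points = [(0, 0.0)]
    points += sorted((mark, UMS_FRACTION[grade] * ums_max)
                     for grade, mark in boundaries.items())
    points.append((raw_max, float(ums_max)))
    raw_marks = [x for x, _ in points]
    # Index of the first anchor above `raw` (capped so full marks still works).
    i = min(bisect_right(raw_marks, raw), len(points) - 1)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return round(y0 + (raw - x0) * (y1 - y0) / (x1 - x0))


# Hypothetical raw boundaries for one controlled assessment (out of 80 raw,
# worth 120 UMS). These are NOT AQA's real January/June figures.
january = {"D": 32, "C": 40}
june = {"D": 35, "C": 43}
for label, bounds in [("January", january), ("June", june)]:
    print(label, raw_to_ums(41, 80, 120, bounds))  # prints "January 73", then "June 69"
```

With these made-up figures, a raw mark of 41 sits just above the January C boundary (73 UMS, where a C on this unit needs 72) but below the June one (69 UMS), so the same piece of work loses marks purely because of when it was submitted.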

The goalposts were indeed moved, and made smaller, and that’s simply unfair.

The Impact:

By my calculations, 15 students at our school would have received a grade C instead of a grade D if their controlled assessments had been measured against the January boundaries. As a school, we would have increased our 5 A*-C including English and maths percentage by 5 points instead of seeing it drop by 2. That's huge for those 15 students (others also dropped in other grades) and it's huge for us. We're expecting Ofsted later this year and may now struggle to get another Outstanding judgement, as pupil progress is a limiting factor. Parents will judge us on that figure when deciding whether to send their child to our school. It would have been far worse if we hadn't entered students for the exam in January (we'd have lost another 8 Cs), and this will be why certain schools have been particularly badly affected.

Causes:

Political pressure could have been involved; I doubt we'll ever know. Ofqual's insistence that overall grade 'inflation' should stall this year has more to do with it.

But my gut feeling is that AQA made a horrible mess back in January and have now attempted to fix it with all the finesse and honesty of a heavyweight boxer.

Remember that 75% of students still achieved a C+ in this examination, but we don't know how they were split between January and June. My suspicion is that AQA messed up the January grade boundaries and that it was too easy to get a C back then.

Wind that forward to June: with similar grade boundaries, it stands to reason that AQA would have been on course to award a C to 80%+ of candidates in English Language. This would have blown Ofqual's grade inflation requests out of the water. So to counter that, they raised all the grade boundaries, making it much harder to get a C in June. And as we've discussed, where the controlled assessments are concerned, that is completely immoral.

This is a simplistic example, but it will do for illustration. Measured against the January boundaries, we missed out on 10% of our C+ grades in English. If that happened in every school sitting AQA English, there would have been an extra 38,000 grade Cs in England. That would bump the total to 420,000 nationwide, giving 68.7% C+ grades, an 'inflation' of 3% and a very unhappy Ofqual and Mr Gove.

January was a mess. AQA fixed it with a biased sledgehammer.

What next?

If this hypothesis is correct then I think the entire 2012 results need to be reassessed fairly by a third party. If boundaries from January need to be made harder then so be it. But individual students cannot be penalised for the arbitrary date of their controlled assessment submission.

Schools should contest these results, possibly collectively.

AQA should issue a clear statement explaining exactly what has happened. A breakdown of results comparing January to June would be interesting.

Ofsted and co need to be very careful about how they use league table comparison figures this year, because those figures can no longer be used to compare one school fairly with another.

If you want to use my spreadsheet, which calculates the different grades a pupil would have achieved depending on the submission date of their CAs, please feel free. You need to enter UMS marks in the relevant yellow cells. (I set up a marksheet in SIMS and then exported it to a spreadsheet; you may wish to do the same.) If you spot any errors in my calculations or logic, please let me know. It's set up for English Language, but it could easily be changed for the straight English course by changing the lookup on sheet 2 from the ENL01 details to the ENG01 details.
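The spreadsheet itself isn't reproduced here, but its core idea can be sketched in Python: add up a pupil's unit UMS marks once with the controlled assessments converted on January boundaries and once on June boundaries, then compare the resulting grades. The 300-UMS total and the fixed overall thresholds (C at 180 UMS, i.e. 60%) are my assumptions about the qualification rather than figures quoted above, and the function name and pupil marks are hypothetical.

```python
# Assumed overall thresholds on a 300-UMS qualification (90%, 80%, ... 20%).
# These are assumptions, not figures taken from the post or from AQA.
GRADE_THRESHOLDS = [("A*", 270), ("A", 240), ("B", 210), ("C", 180),
                    ("D", 150), ("E", 120), ("F", 90), ("G", 60)]


def overall_grade(unit_ums: list[int]) -> str:
    """Sum a pupil's unit UMS marks and return the best grade whose threshold is met."""
    total = sum(unit_ums)
    for grade, threshold in GRADE_THRESHOLDS:
        if total >= threshold:
            return grade
    return "U"


# Hypothetical pupil: the Unit 1 exam mark is the same in both scenarios; only
# the controlled assessment (Units 2 and 3) UMS changes with the boundaries used.
unit1 = 100
ca_january = [40, 44]  # CA converted on January boundaries (made-up figures)
ca_june = [38, 40]     # the same work converted on June boundaries (made-up figures)
print(overall_grade([unit1, *ca_january]), overall_grade([unit1, *ca_june]))  # prints "C D"
```

A 6-UMS difference in the controlled assessments is enough here to move this invented pupil from 184 UMS (a C) to 178 UMS (a D), which is the kind of swing the spreadsheet is designed to expose.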

EDIT: Something of a response here from AQA. Interestingly, 94% of CA was submitted in June. That puts a slightly different slant on things, but it still means the 6% entered early had a huge advantage, as described above. And there's no comment on the difference in difficulty between the Unit 1 exams. Did our Higher Tier pupils really drop 10 UMS marks in the last 6 months? I still stand by my assumption that things were too 'easy' in January and too 'hard' in June. Unfair.